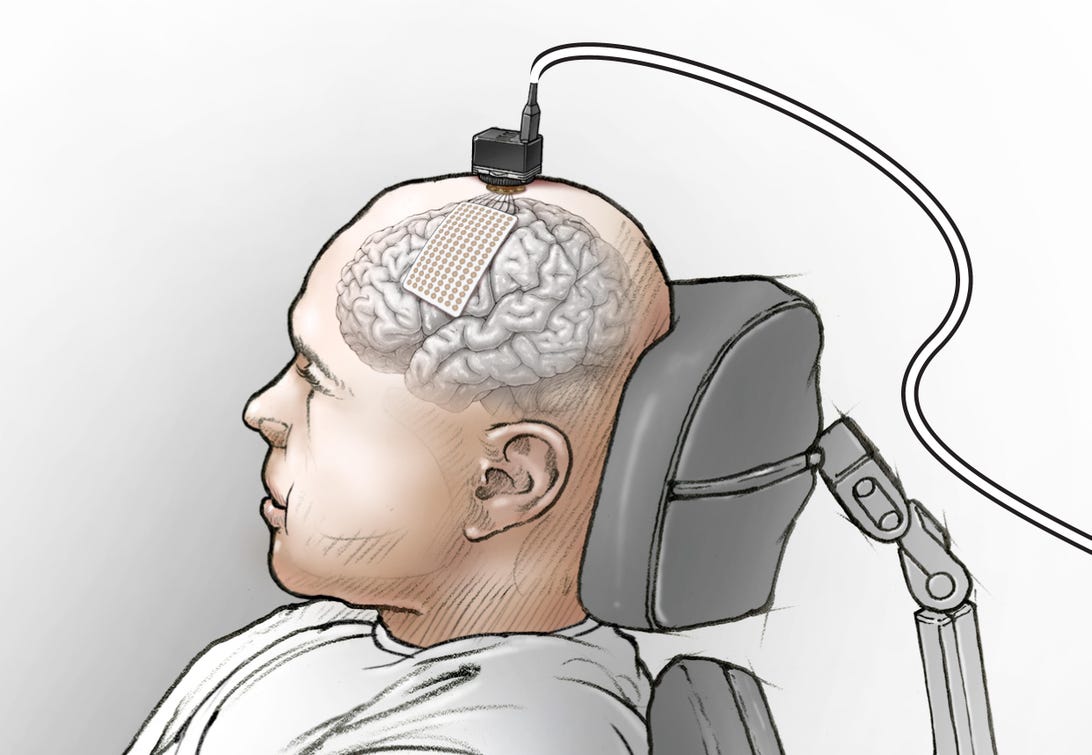

UCSF’s brain-computer interface is surgically applied directly to a patient’s motor cortex to enable communication.

Ken Probst, UCSF

Facebook’s work in neural input technology for AR and VR looks to be moving in a more wrist-based direction, but the company continues to invest in research on implanted brain-computer interfaces. The latest phase of a years-long Facebook-funded study from UCSF, called Project Steno, translates attempts at conversation from a speech-impaired paralyzed patient into words on a screen.

“This is the first time someone just naturally trying to say words could be decoded into words just from brain activity,” said Dr. David Moses, lead author of a study published Wednesday in the New England Journal of Medicine. “Hopefully, this is the proof of principle for direct speech control of a communication device, using intended attempted speech as the control signal by someone who cannot speak, who is paralyzed.”

Brain-computer interfaces (BCIs) have been behind a number of promising recent breakthroughs, including Stanford research that could turn imagined handwriting into on-screen text. UCSF’s study takes a different approach, analyzing actual attempts at speech and acting almost like a translator.

The study, run by UCSF neurosurgeon Dr. Edward Chang, involved implanting a “neuroprosthesis” of electrodes in a paralyzed man who had a brainstem stroke at age 20. With an electrode patch implanted over the area of the brain associated with controlling the vocal tract, the man attempted to respond to questions displayed on a screen. UCSF’s machine learning algorithms can recognize 50 words and convert them into sentences in real time. For instance, if the patient saw a prompt asking “How are you today?” the response appeared on screen as “I am very good,” popping up word by word.

Moses said the work will continue beyond Facebook’s funding phase and that a lot of research still lies ahead. For instance, it’s still unclear how much of the speech recognition comes from recorded patterns of brain activity, from vocal utterances, or from a combination of both.

Moses stresses that the study, like other BCI work, isn’t mind reading: it relies on sensing brain activity that happens specifically when a person attempts a certain behavior, like speaking. Moses also says the UCSF team’s work doesn’t yet translate to non-invasive neural interfaces. Elon Musk’s Neuralink promises wireless transmission of data from brain-implanted electrodes for future research and assistive uses, but so far that tech has only been demonstrated on a monkey.

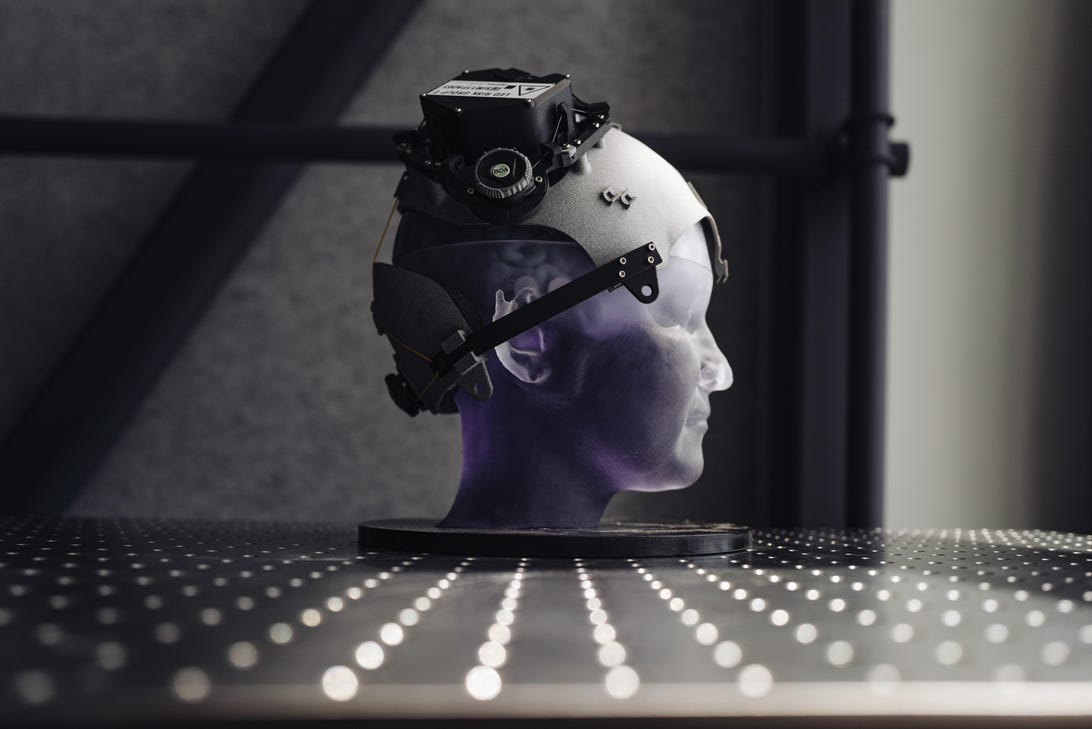

Facebook Reality Labs’ BCI head-worn device prototype, which didn’t have implanted electrodes, is going open-source.

Meanwhile, Facebook Reality Labs Research has shifted away from head-worn brain-computer interfaces for future VR/AR headsets and, for the near future, is focusing on wrist-worn devices based on the tech it acquired from CTRL-Labs. Facebook Reality Labs had built its own non-invasive, head-worn research prototypes for studying brain activity, and the company has announced it plans to make these available for open-source research projects as it stops focusing on head-mounted neural hardware. (UCSF received funding from Facebook but no hardware.)

“Aspects of the optical head mounted work will be applicable to our EMG research at the wrist. We will continue to use optical BCI as a research tool to build better wrist-based sensor models and algorithms. While we will continue to leverage these prototypes in our research, we are no longer developing a head mounted optical BCI device to sense speech production. That’s one reason why we will be sharing our head-mounted hardware prototypes with other researchers, who can apply our innovation to other use cases,” a Facebook representative confirmed via email.

Consumer-targeted neural input technology is still in its infancy, however. While consumer devices using noninvasive head- or wrist-worn sensors exist, they’re far less accurate than implanted electrodes right now.
